Spotlight: USystems and DataBank deliver next-gen cooling

As rack densities in the data centre sector continue to climb, operators are facing growing pain points: industry-standard cooling solutions are struggling to keep pace with the increased demand.

“Data centre rack capacities of around 4-6kW are becoming less prominent, with kW duties creeping towards 12-15kW. For true high performance computing (HPC), kW duties of 40kW and above are being requested,” writes Gary Tinkler, Global Commercial Director at USystems, in a new whitepaper on the future of cooling in the data centre industry, shared with Data Centre Magazine.

ATL1, a leading-edge HPC data centre in the heart of Georgia Tech (image courtesy of USystems)

He adds that most data centre operators are now being forced to cope with these higher kW duties through bolt-on components such as additional vent tiles, aisle containment and in-row chillers, an approach he says is not sustainable.

These bolt-on components can significantly undermine efficient data centre architecture, as cold aisles get wider and cooling equipment takes up more and more valuable space. “Space in a data centre equals revenue,” he explains. “Therefore, reducing this space has a serious impact on the revenue and utilisation of real estate for the business.”

The fact that cooling “has not been a subject of great innovation” for the past 30 years is becoming an increasingly thorny issue for operators. USystems believes it has part of the answer to this puzzle, which it demonstrated in a recent project with DataBank and Georgia Tech.

The project involved DataBank constructing a purpose-built data centre for Georgia Tech in Atlanta. The facility, ATL1, is located in the CODA building and is one of the most advanced data centres in the US, partly due to the unique demands placed on it by the Georgia Tech supercomputer. ATL1 will support data-driven research in astrophysics, computational biology, health sciences, computational chemistry, materials and manufacturing, and more, including the study of efficiency in HPC systems.

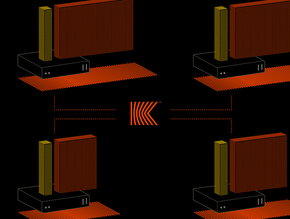

ATL1 is also a site of data centre cooling innovation, thanks to its new ColdLogik rear door cooling system. Hot exhaust air from the servers in each cabinet passes through the cabinet’s rear door, where it gives up its waste heat to naturally cold water running through a closed-loop system. The warmed water then flows out of the facility as new cold water is cycled back in, keeping cabinets continuously cool far more efficiently than traditional CRAC/CRAH (computer room air conditioner/air handler) cooling techniques.

According to USystems, the ColdLogik system consumes 90% less energy than traditional cooling solutions, while giving DataBank access to 80% more floor space.

“Traditional data centres are these big, heavy, clunky facilities. If they’re not at capacity then they can be running at PUEs in the 2s or 3s. It’s terrible,” said Brandon Peccoralo, DataBank’s general manager for Atlanta, in a recent episode of the Data Centre Cooldown podcast. “With the liquid cooling rear door solution, I can run each individual cabinet at a PUE of lower than 1.2, and that’s amazing.”
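For context, power usage effectiveness (PUE) is the standard ratio of the total energy a facility draws to the energy actually delivered to its IT equipment, so a value of 1.0 would mean zero overhead for cooling and power distribution. The figures below are illustrative arithmetic only, assuming a hypothetical 100 kW IT load; they are not measurements from ATL1.

$$
\mathrm{PUE} = \frac{E_{\mathrm{facility}}}{E_{\mathrm{IT}}}, \qquad E_{\mathrm{overhead}} = (\mathrm{PUE} - 1)\,E_{\mathrm{IT}}
$$

$$
\mathrm{PUE} = 1.2:\ (1.2 - 1) \times 100\,\mathrm{kW} = 20\,\mathrm{kW} \qquad \mathrm{PUE} = 2.5:\ (2.5 - 1) \times 100\,\mathrm{kW} = 150\,\mathrm{kW}
$$

On these assumed numbers, moving from a PUE of 2.5 to 1.2 would cut non-IT overhead from 150 kW to 20 kW for the same computing load.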